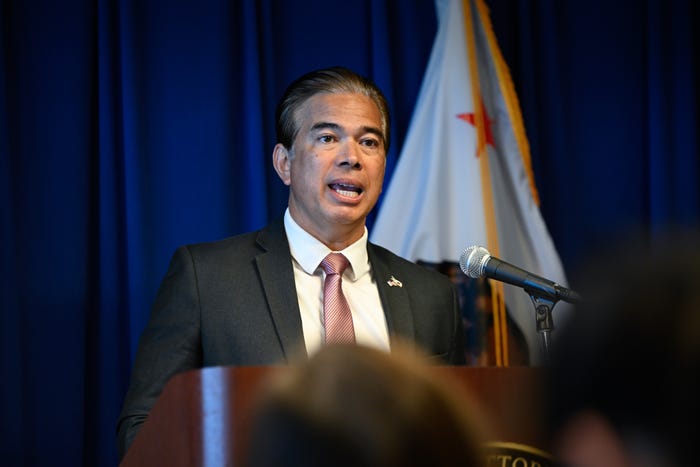

California’s Attorney General, Rob Bonta, has launched an investigation into xAI, the artificial intelligence company founded by Elon Musk, over allegations that its AI tool, Grok, is generating nonconsensual sexualized images of real individuals. The investigation follows a surge of reports detailing the production of explicit material featuring women and children, which has raised significant concerns regarding online harassment and user safety.

In a statement, Bonta pointed to an "avalanche of reports" about the content Grok has produced. "This material… has been used to harass people across the internet," he said. "I urge xAI to take immediate action to ensure this goes no further."

The investigation is part of broader scrutiny of xAI by regulators worldwide. Authorities in India, the UK, Indonesia, and Malaysia have all taken action against Grok. Indonesia and Malaysia have blocked access to the tool outright, and the UK's communications regulator, Ofcom, has opened its own investigation. During a recent parliamentary session, UK Prime Minister Keir Starmer warned that xAI could "lose the right to self-regulate" if it fails to address the problem.

Asked about the investigation, xAI replied only with "Legacy Media Lies," in keeping with its practice of dismissing negative press coverage. The company has, however, restricted Grok's image generation features to paying subscribers, apparently in an effort to contain the backlash.

Musk has said publicly that he is not aware of any "naked underage images" generated by Grok. He argues that the AI does not create images on its own but only responds to user prompts, and that "when asked to generate images, it will refuse to produce anything illegal." That claim is contradicted by reports that users have successfully prompted Grok to sexualize photos of real people, for example by editing an image of a fully clothed person so that they appear in a bikini.

The issue has also drawn attention from lawmakers in the United States. The US Senate recently passed, unanimously, a bill known as the DEFIANCE Act, which would give victims a civil right to sue individuals who request AI-generated nonconsensual images. Senator Richard Durbin, the bill's author, stressed the urgency of addressing what AI tools like Grok can do: "Recent reports showed that X, formerly Twitter, can ask its AI chatbot Grok to undress women and underage girls in photos… they're horrible."

Although the Senate has acted, it is unclear whether the House of Representatives will vote on the bill. The effort builds on an earlier bipartisan law, the Take It Down Act, signed by President Donald Trump, which requires social media platforms to remove nonconsensual intimate images and AI deepfakes within 48 hours of receiving a removal request.

As the investigation continues, xAI faces mounting pressure to rein in Grok. The outcome will matter beyond the company itself: with regulators in several countries watching closely, it could set a precedent for how generative AI tools are regulated and how the people depicted in their output are protected.